Biography

Hey! I’ll soon be graduating as a Machine Learning D.Phil student in the OATML group at the University of Oxford, where I’m supervised by Prof. Yarin Gal.

I’m currently working on methods, architectures, and benchmarks that enable transformer-based agents to perform long-horizon tasks by creating and accessing memories, using large-scale RL.

Some of the topics I have researched over the past three years are:

- Leveraging advances in visual diffusion modeling for robotics

- Mechanistic interpretability in transformer-based world models

- Training generative world models for video games and robotics, and

- Causally-correct, sample-efficient learning from imbalanced data.

I also collaborated closely with researchers from Toyota Research (Adrien Gaidon and Rowan McAllister) on topics related to causal robot learning.

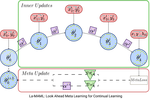

Prior to my D.Phil, I was a deep learning researcher at Wayve, exploring reinforcement learning algorithms on autonomous driving data. I completed a Research Master’s in Machine Learning at Mila (Sept 2020), where I did research on meta learning, continual learning, and inverse reinforcement learning. I was also an ED&I Fellow with the MPLS department at the University of Oxford in the 2022-2023 cohort.

Download my resumé.

Interests

- Policy Learning, Reinforcement Learning

- Diffusion modeling

- Continual Learning, Meta Learning

- Memory-augmented models

Education

D.Phil in Machine Learning (AIMS CDT), 2024

University of Oxford

Research Master's in Machine Learning, 2020

Montreal Institute for Learning Algorithms (Mila)

B.Tech in Maths and Computing (Applied Mathematics), 2016

Delhi Technological University (DTU/DCE)